New Article in Proceedings of the IEEE on Brain-Inspired Learning on Neuromorphic Substrates

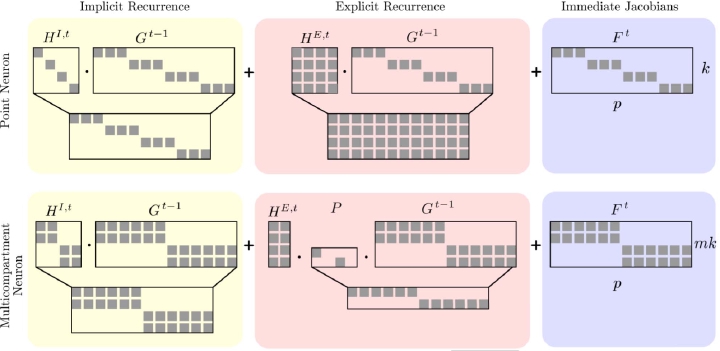

Neuromorphic hardware strives to emulate brain-like neural networks and thus holds promise for scalable, low-power information processing on temporal data streams. To solve real-world problems, however, these networks need to be trained. Training on neuromorphic substrates poses significant challenges because gradient-based learning algorithms typically run offline and require nonlocal computations. This article provides a mathematical framework for designing practical online learning algorithms for neuromorphic substrates. Specifically, we show a direct connection between real-time recurrent learning (RTRL), an online algorithm for computing gradients in conventional recurrent neural networks (RNNs), and biologically plausible learning rules for training spiking neural networks (SNNs). Furthermore, we motivate a sparse approximation based on block-diagonal Jacobians, which reduces the algorithm’s computational complexity, diminishes the nonlocal information requirements, and empirically leads to good learning performance, thereby improving its applicability to neuromorphic substrates. In summary, our framework bridges the gap between synaptic plasticity and gradient-based approaches from deep learning and lays the foundations for powerful information processing on future neuromorphic hardware systems.
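To make the two ideas in the abstract concrete, here is a minimal NumPy sketch. It is an illustration under simplifying assumptions, not the article's method: it uses a plain tanh RNN with a summed squared-error loss instead of a spiking network, and the function names (`rtrl_step`, `diag_step`, `run_online`) are hypothetical. The exact variant carries the full sensitivity tensor forward in time, as RTRL does. The approximate variant keeps only each neuron's self-recurrence, which is the simplest case of a block-diagonal Jacobian.

```python
import numpy as np


def rtrl_step(W, U, h_prev, x, P_prev):
    """One forward step plus the exact RTRL sensitivity update.

    P[k, i, j] holds d h[k] / d W[i, j] for the recurrent weights W.
    """
    h = np.tanh(W @ h_prev + U @ x)
    d = 1.0 - h ** 2  # tanh derivative, one value per neuron
    # Nonlocal term: old sensitivities propagated through all recurrent weights.
    P = np.einsum("kl,lij->kij", W, P_prev)
    # Immediate term: neuron i depends directly on its own weight W[i, j].
    idx = np.arange(h.shape[0])
    P[idx, idx, :] += h_prev
    P *= d[:, None, None]
    return h, P


def diag_step(W, U, h_prev, x, E_prev):
    """Same forward step, but with a diagonal approximation of the Jacobian.

    E[i, j] approximates d h[i] / d W[i, j]. Only the self-connection
    W[i, i] carries the trace forward, so every update is local to a synapse.
    """
    h = np.tanh(W @ h_prev + U @ x)
    d = 1.0 - h ** 2
    E = d[:, None] * (np.diag(W)[:, None] * E_prev + h_prev[None, :])
    return h, E


def run_online(W, U, xs, ys, exact=True):
    """Accumulate loss and dLoss/dW online, with no backward pass through time."""
    n = W.shape[0]
    h = np.zeros(n)
    trace = np.zeros((n, n, n)) if exact else np.zeros((n, n))
    grad = np.zeros_like(W)
    loss = 0.0
    for x, y in zip(xs, ys):
        if exact:
            h, trace = rtrl_step(W, U, h, x, trace)
            err = h - y
            grad += np.einsum("k,kij->ij", err, trace)
        else:
            h, trace = diag_step(W, U, h, x, trace)
            err = h - y
            grad += err[:, None] * trace  # error of neuron i times its own trace
        loss += 0.5 * np.sum(err ** 2)
    return loss, grad
```

For `n` recurrent units, the exact variant stores `n**3` sensitivities and needs on the order of `n**4` operations per time step, while the diagonal variant stores and updates only `n**2` values. Calling `run_online(W, U, xs, ys, exact=False)` on the same inputs gives the approximate gradient, which coincides with the exact one when `W` is diagonal.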

See publication here.